The normal vacuum command doesn’t seem to do anything when Timescale is in use. Is there any equivalent command or procedure to shrink database size after data is deleted in various chunks?

Are chunks a kind of partition?

I’m searching too for this topic. I believe this postgresql official link could be useful:

- If you are using manual

VACUUMorANALYZEcommands, don’t forget that you need to run them on each child table individually. A command like:ANALYZE measurement;

will only process the root table.

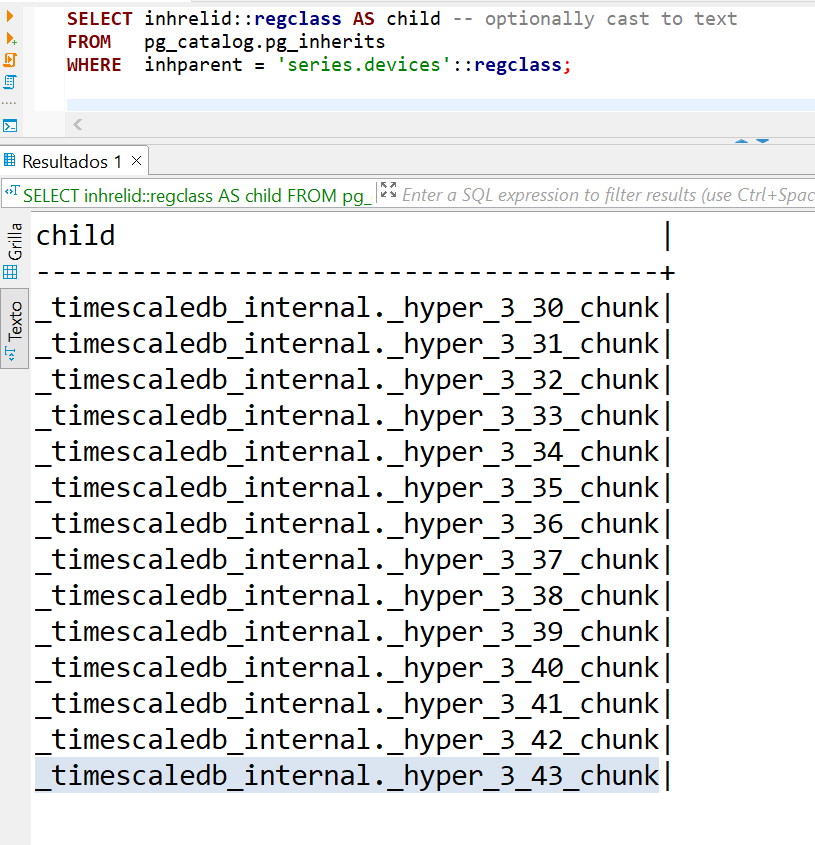

It seems to be the same result that throws the equivalent timescale function:

select * from show_chunks('series.devices');

I’m only a newbie though,

Regards.

That’s really my question… how to properly vacuum the database, and how to do it in one go. I’m sure there must be a way using the extension.

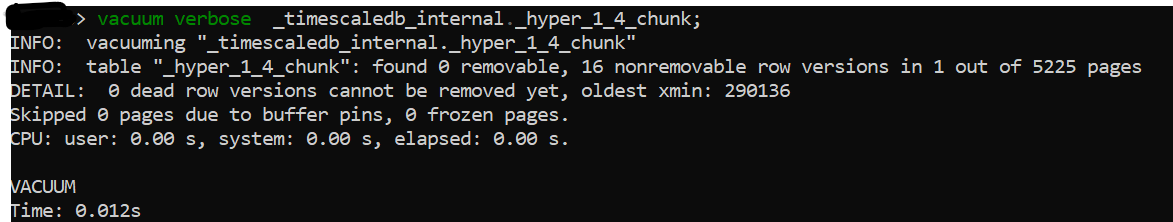

Vacuuming via the hypertable (parent table) should work as expected. If you do a VACUUM VERBOSE... as @nando showed, you should see the output through the console. Feel free to provide more detail about what you’re seeing.

Depending on your use case, one of the advantages of using hypertables is the ability to set data retention policies to just drop chunks as they get older. It sounds like you’re doing more targeted data deletion inside of each chunk, but just in case I wanted to make sure I mentioned that. That is, if you were doing something like

DELETE FROM hypertable WHERE ts < now()-'1 month'::interval,

data retention would be the better approach:

select drop_chunks('hypertable','1 month');

Thanks, I can see that vacuum actually does seem to work, and does take the underlying chunk into account. Thanks for the “verbose” hint.

We do use rentention, and that’s great for overall storage limits… this is however as you write more targeted deletes.

Cool. There are definitely times where targeted deletes are necessary and I get that.

Are you using TimescaleDB compression? Not only does it save space on disk and often speed up historical queries, it’s actually possible to make more efficient deletes of historical data if it’s part of the segmentby argument.

Could explain more if you’re interested.

Out next release (schema version) includes compression setup, but the issue we have right now is the lack of support for deletes/inserts in compressed chunks.

Yep, I understand that. For the record, you can insert into compressed chunks (starting with TimescaleDB 2.2 I think) - but you cannot UPDATE/DELETE yet… at least not directly.

My comment on DELETE is that if you segmantby the relevant column (say something like account_id), then the rows in the compressed chunks are all grouped by account_id. You’re guaranteed that no other data points in that compressed row will contain data for another account_id.

This means it is possible to delete rows from the compressed chunk based on a segmentby column if that would be helpful (you do have to query directly against the compressed hypertable name, you can’t do a delete through the hypertable). Consider something like GDPR for example, if your data was all segmented by account_id and you needed to remove historical, compressed data, you don’t have to decompress the chunk if it’s a global delete like that (independent of time, specifically).

As soon as you try to delete compressed data based on segmentby and another column that is compressed, it’s not possible without decompressing first.

Hope that makes sense. Might not be helpful in your situation, but if we’re talking about VACUUM, that usually implies larger deletes and this might be a useful thing to know.

Found this topic while looking for a solution to make select count(*) from <hypertable> fast, i.e. use index.

I did vacuum the hypertable, but the query still seems to do full scan:

QUERY PLAN |

-----------------------------------------------------------------------------------------------------------+

Finalize Aggregate (cost=1716570.48..1716570.49 rows=1 width=8) |

-> Gather (cost=1716570.27..1716570.48 rows=2 width=8) |

Workers Planned: 2 |

-> Partial Aggregate (cost=1715570.27..1715570.28 rows=1 width=8) |

-> Parallel Append (cost=0.00..1634610.37 rows=32383958 width=0) |

-> Parallel Seq Scan on _hyper_9_72_chunk (cost=0.00..189481.92 rows=4166592 width=0)|

-> Parallel Seq Scan on _hyper_9_67_chunk (cost=0.00..189475.15 rows=4166615 width=0)|

-> Parallel Seq Scan on _hyper_9_68_chunk (cost=0.00..189474.69 rows=4166669 width=0)|

-> Parallel Seq Scan on _hyper_9_66_chunk (cost=0.00..189474.67 rows=4166667 width=0)|

-> Parallel Seq Scan on _hyper_9_69_chunk (cost=0.00..189470.53 rows=4166553 width=0)|

-> Parallel Seq Scan on _hyper_9_70_chunk (cost=0.00..189468.42 rows=4166642 width=0)|

-> Parallel Seq Scan on _hyper_9_71_chunk (cost=0.00..189467.24 rows=4166624 width=0)|

-> Parallel Seq Scan on _hyper_9_73_chunk (cost=0.00..146377.96 rows=3217596 width=0)|

JIT: |

Functions: 12 |

Options: Inlining true, Optimization true, Expressions true, Deforming true |

while the table does have index on the time column:

CREATE INDEX lineorder_ts_idx ON public.lineorder USING btree (ts DESC);

How do I speed up the query?

Hi @novoselov ,

Found this topic while looking for a solution to make

select count(*) from <hypertable>fast, i.e. use index.

This link could be useful.

In general I use, almost ever, hyperfunctions and the toolkit functions instead of the Postgres regular functions. They are great!

Regards.

Thanks for the hints. Unfortunately, I need an exact number. OK, will be investigating further.