Hi,

We have major performance issues with updating rows in compressed chunks.

We have a strong requirement to be able to update older, already compress data (should happen very rarely though). We don’t want to decompress the whole chunk in such case but only update relevant rows.

Until now we have been using TimescaleDB 2.15.3 on PostgreSQL 14. We just upgraded TimescaleDB to the latest version 2.16.0 (which should significantly improve UPSERT speed in compressed chunks), but we don’t notice any difference.

Our hypertable:

CREATE TABLE IF NOT EXISTS dbo.result_double (

mprt_fk INTEGER NOT NULL,

result_timestamp TIMESTAMPTZ NOT NULL,

result_value DOUBLE PRECISION NOT NULL,

status INTEGER NOT NULL,

CONSTRAINT pk_result_double PRIMARY KEY (mprt_fk, result_timestamp)

);

SELECT create_hypertable('dbo.result_double', 'result_timestamp', chunk_time_interval => interval '1 week', if_not_exists => TRUE);

ALTER TABLE dbo.result_double SET (timescaledb.compress, timescaledb.compress_orderby = 'result_timestamp DESC', timescaledb.compress_segmentby = 'mprt_fk');

-- auto compression is disabled, chunks are compressed manually

We have 8 chunks, 4 of them are already compressed. We noticed that updates work very fast if the rows to be updated are not yet compressed. We update rows in hypertable dbo.result_double from a temporary table update_table:

CREATE TEMP TABLE IF NOT EXISTS update_table (

mprt_fk INTEGER,

result_timestamp TIMESTAMPTZ,

new_result_value DOUBLE PRECISION,

new_status INTEGER,

old_result_value DOUBLE PRECISION,

old_status INTEGER

) ;

Update statement:

EXPLAIN (VERBOSE, COSTS, SETTINGS, BUFFERS)

UPDATE dbo.result_double

SET

result_value = t.new_result_value,

status = t.new_status

FROM update_table AS t

WHERE

result_double.mprt_fk = t.mprt_fk

AND result_double.result_timestamp = t.result_timestamp

-- To make chunk pruning work

AND result_double.result_timestamp >= '2023-02-02 00:00:00+01'

AND result_double.result_timestamp <= '2023-02-02 06:00:00+01';

When updating data in not compressed chunks it works very fast, below is the EXPLAIN plan:

Custom Scan (HypertableModify) (cost=0.00..3613.62 rows=1 width=28)

-> Update on dbo.result_double (cost=0.00..3613.62 rows=1 width=28)

Update on _timescaledb_internal._hyper_102_203_chunk result_double_1

Update on _timescaledb_internal._hyper_102_226_chunk result_double_2

-> Nested Loop (cost=0.00..3613.62 rows=1 width=28)

Output: t.new_result_value, t.new_status, t.ctid, result_double.tableoid, result_double.ctid

-> Seq Scan on pg_temp_15.update_table t (cost=0.00..22.70 rows=1270 width=30)

Output: t.new_result_value, t.new_status, t.ctid, t.mprt_fk, t.result_timestamp

-> Append (cost=0.00..2.81 rows=2 width=22)

-> Seq Scan on _timescaledb_internal._hyper_102_203_chunk result_double_1 (cost=0.00..0.00 rows=1 width=22)

Output: result_double_1.mprt_fk, result_double_1.result_timestamp, result_double_1.tableoid, result_double_1.ctid

Filter: ((result_double_1.result_timestamp >= '2023-02-02 00:00:00+01'::timestamp with time zone) AND (result_double_1.result_timestamp <= '2023-02-02 06:00:00+01'::timestamp with time zone) AND (t.mprt_fk = result_double_1.mprt_fk) AND (t.result_timestamp = result_double_1.result_timestamp))

-> Index Scan using 226_215_pk_result_double on _timescaledb_internal._hyper_102_226_chunk result_double_2 (cost=0.57..2.80 rows=1 width=22)

Output: result_double_2.mprt_fk, result_double_2.result_timestamp, result_double_2.tableoid, result_double_2.ctid

Index Cond: ((result_double_2.mprt_fk = t.mprt_fk) AND (result_double_2.result_timestamp = t.result_timestamp) AND (result_double_2.result_timestamp >= '2023-02-02 00:00:00+01'::timestamp with time zone) AND (result_double_2.result_timestamp <= '2023-02-02 06:00:00+01'::timestamp with time zone))

Settings: effective_cache_size = '193302MB', effective_io_concurrency = '256', max_parallel_workers = '32', max_parallel_workers_per_gather = '16', random_page_cost = '1.1', work_mem = '200618kB'

Query Identifier: 414143609897445276

Planning:

Buffers: shared hit=110

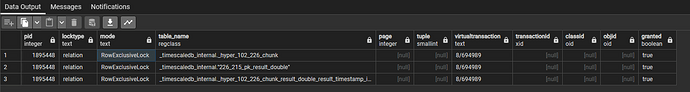

But when we want to update data in already compressed chunks the operation does not finish, not even in 10 mins. This happens also if we want to update only 1 row. Explain plan:

Custom Scan (HypertableModify) (cost=30.89..40686.19 rows=1 width=28)

-> Update on dbo.result_double (cost=30.89..40686.19 rows=1 width=28)

Update on _timescaledb_internal._hyper_102_197_chunk result_double_1

-> Nested Loop (cost=30.89..40686.19 rows=1 width=28)

Output: t.new_result_value, t.new_status, t.ctid, result_double_1.tableoid, result_double_1.ctid

-> Seq Scan on pg_temp_15.update_table t (cost=0.00..22.70 rows=1270 width=30)

Output: t.new_result_value, t.new_status, t.ctid, t.mprt_fk, t.result_timestamp

-> Bitmap Heap Scan on _timescaledb_internal._hyper_102_197_chunk result_double_1 (cost=30.89..32.01 rows=1 width=22)

Output: result_double_1.mprt_fk, result_double_1.result_timestamp, result_double_1.tableoid, result_double_1.ctid

Recheck Cond: ((result_double_1.result_timestamp = t.result_timestamp) AND (result_double_1.result_timestamp >= '2023-01-02 00:00:00+01'::timestamp with time zone) AND (result_double_1.result_timestamp <= '2023-01-02 06:00:00+01'::timestamp with time zone))

Filter: (t.mprt_fk = result_double_1.mprt_fk)

-> Bitmap Index Scan on _hyper_102_197_chunk_result_double_result_timestamp_idx (cost=0.00..30.89 rows=1 width=0)

Index Cond: ((result_double_1.result_timestamp = t.result_timestamp) AND (result_double_1.result_timestamp >= '2023-01-02 00:00:00+01'::timestamp with time zone) AND (result_double_1.result_timestamp <= '2023-01-02 06:00:00+01'::timestamp with time zone))

Settings: effective_cache_size = '193302MB', effective_io_concurrency = '256', max_parallel_workers = '32', max_parallel_workers_per_gather = '16', random_page_cost = '1.1', work_mem = '200618kB'

Query Identifier: 414143609897445276

Planning:

Buffers: shared hit=218

On the other hand “regular” updates that do not join other table work fast, example:

Query:

EXPLAIN (VERBOSE, COSTS, SETTINGS, BUFFERS)

UPDATE dbo.result_double SET STATUS = 1 where mprt_fk = 1115 and result_timestamp >= '2023-01-05 00:00:00+01' and result_timestamp < '2023-01-08 09:00:00+01';

Explain plan:

Custom Scan (HypertableModify) (cost=0.54..103.05 rows=998 width=14)

-> Update on dbo.result_double (cost=0.54..103.05 rows=998 width=14)

Update on _timescaledb_internal._hyper_102_197_chunk result_double_1

Update on _timescaledb_internal._hyper_102_198_chunk result_double_2

-> Result (cost=0.54..103.05 rows=998 width=14)

Output: 1, result_double.tableoid, result_double.ctid

-> Append (cost=0.54..93.07 rows=998 width=10)

-> Index Scan using _hyper_102_197_chunk_result_double_result_timestamp_idx on _timescaledb_internal._hyper_102_197_chunk result_double_1 (cost=0.54..30.26 rows=1 width=10)

Output: result_double_1.tableoid, result_double_1.ctid

Index Cond: ((result_double_1.result_timestamp >= '2023-01-05 00:00:00+01'::timestamp with time zone) AND (result_double_1.result_timestamp < '2023-01-08 09:00:00+01'::timestamp with time zone))

Filter: (result_double_1.mprt_fk = 1115)

-> Seq Scan on _timescaledb_internal._hyper_102_198_chunk result_double_2 (cost=0.00..57.81 rows=997 width=10)

Output: result_double_2.tableoid, result_double_2.ctid

Filter: ((result_double_2.result_timestamp >= '2023-01-05 00:00:00+01'::timestamp with time zone) AND (result_double_2.result_timestamp < '2023-01-08 09:00:00+01'::timestamp with time zone) AND (result_double_2.mprt_fk = 1115))

Settings: effective_cache_size = '193302MB', effective_io_concurrency = '256', max_parallel_workers = '32', max_parallel_workers_per_gather = '16', random_page_cost = '1.1', work_mem = '200618kB'

Query Identifier: 99819599961504784

Planning:

Buffers: shared hit=146

Any help would be highly appreciated