I am looking to aggregate my data into larger timeframes as they get older. Currently I have 15min intervals of data that I wish to aggregate into 1 day averages after 7 days and delete the raw data to save disk space. I have tried to follow this guide to set it up. The only problem is when I query the materialized view the raw data doesn’t appear. Here are the policies I’ve set below. Is this the proper way to summarize the data and be able to query a combination of the raw and aggregated data?

Hey @Graham,

I don’t fully follow what you mean here:

The only problem is when I query the materialized view the raw data doesn’t appear.

Maybe you’re using a UNION to query 15-minute data from the raw table and then joining to daily data from the CAGG? That is, what’s your expectation and an example query.

Thanks!

Ah, and of course I forgot to ask.

What’s the chunk_time_interval of your hypertable set to? (if you didn’t specify anything directly, then it defaults to 7 days). Knowing this and the other information will help.

Maybe I should clarify what I am trying to do. I have 15min data and I wish to aggregate it to 1 day data after 7 days. I want to run a query where data older than a week will be the aggregated day averages and anything newer is the raw 15min data.

Now to address your questions the chunk_time_interval is 7 days and my query joins the materialized view with another table with data that isn’t related to the table I want to summarize.

The view query where daily_average is the aggregate table. Maybe I misunderstood what real time aggregate is, I thought it would allow me to query the raw data from the aggregate table

SELECT *

FROM daily_average a

LEFT JOIN tableB b ON a.columnA::text = b.columnB::text;

Thanks for the feedback.

First, it does sound like there’s been a misunderstanding of how real-time continuous aggregates work. When you query a continuous aggregate, you’re querying the data at that rollup (time_bucket) level. So, if you define the CAGG as:

SELECT time_bucket('1 day', ts) bucket, ...

…then any time you query that CAGG, you’ll only get timestamps of 1-day back. It will never return any raw 15-minute data.

When real-time is enabled (which it is by default), the materialized CAGG data will be appended (through a UNION) with aggregated data from the raw hypertable (your 15-minute data) starting from the end of the materialized data until the present. But again, you’ll only get back 1-day timestamps regardless of having real-time enabled or not.

Does that make sense?

The quickest alternative to achieve what I think you want, is to probably create your own UNION query, has the right timestamp filters in place to pull the daily data, and appends the raw data. Unless I’ve misunderstood.

re: Data retention

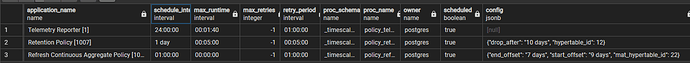

Data retention is done via chunks, not through DELETE. So, while you’ve set the retention to drop data after 10-days, that’s really “any chunk that has data that’s fully 10-days old or more”. TimescaleDB uses the range_end timestamp on the chunk to identify which chunks are eligible to drop.

This means that when you query the raw hypertable, you will likely see data older than 10-days. In your case, if the data retention policy runs every day and you have a 7-day chunk_time_interval, you’ll probably see data for ~13-14 days in the raw hypertable because the range_end of the most recent chunk will take ~3 days to “age out” as it were.

Ok yeah so I’ll need to turn off the real time and then use a query to union the raw data with the aggregated data because the real time is still displaying it in the aggregated day “version” that makes sense to me.

Then for the data retention I think I get what you’re saying. It drops the data by chunk so it waits for the “end” of the chunk to be older than 7 days then drops it so with a 10 day chunk_interval that means a chunk would have to be 14 days old to be deleted.

Thank you so much for the help!

Correct. The date math on the range_end is hard to give an exact answer on, but as an example:

- If a new chunk is created right now, so the

range_end = '2022-07-28 20:56:50'(essentiallynow()+'7 days'::interval) - 10 days from now (

2022-07-31) the policy will look for chunks with anrange_endtimestamp that’s < '2022-07-21…`. The chunk that was created today (in our example) wouldn’t match that for another week +/- a day.

Obviously the creation time of the chunk impacts how this all plays out to some extent.

This is one reason you have the option of changing the chunk_time_interval. If you want to have a little more control over how the data retention policy works, you could change your chunk interval to something like 1 day. This will create more chunks (obviously), but since you’re dropping them after 10 days that probably won’t impact queries much. Sometimes, adding more chunks can impact query performance, although TimescaleDB 2.7 made some major improvements around chunk exclusion.

It’s really dependent on the data you have and the queries you’re running.

Ok this really helped me understand how timescale works, thanks!